Tokens contain a lot of information, and the relationships between tokens contain even more. Making sense of this information reveals the hidden knowledge living within tokens. Unlocking this knowledge is the next evolutionary step for design tokens.

This article is part of the Inside Design Tokens series, which splits into 10 articles:

- Definitions & Traits

- Features

- Modeling & Communication

- The Three Class Token Society

- Put your Tokens on a Scale

- Token Specification

- Naming

- Theming

- Internals

- The Hidden Knowledge <– this article

The Inside Design Tokens series unveiled a ton of data accumulated around design tokens: from organizing them within contexts and domains (bird's-eye view), to understanding the scales on which tokens are aligned and their value permutations for themes, and finally to all the details stored with design tokens.

That mass of information may even be overwhelming. It is better to dissect the parts and see how they align with standards and tooling in the industry.

Information vs. Knowledge

The term “knowledge” is not well defined (Frey-Luxemburger, 2014, p. 13ff); multiple, varying definitions exist. The one described here will not do full justice to the term, but it is enough for the situation at hand. The hierarchical information theory approach (Rehäuser & Krcmar, 1996, p. 6; Bodendorf, 2006, p. 1) distinguishes four levels and gives instructions for how to transform one level into the next.

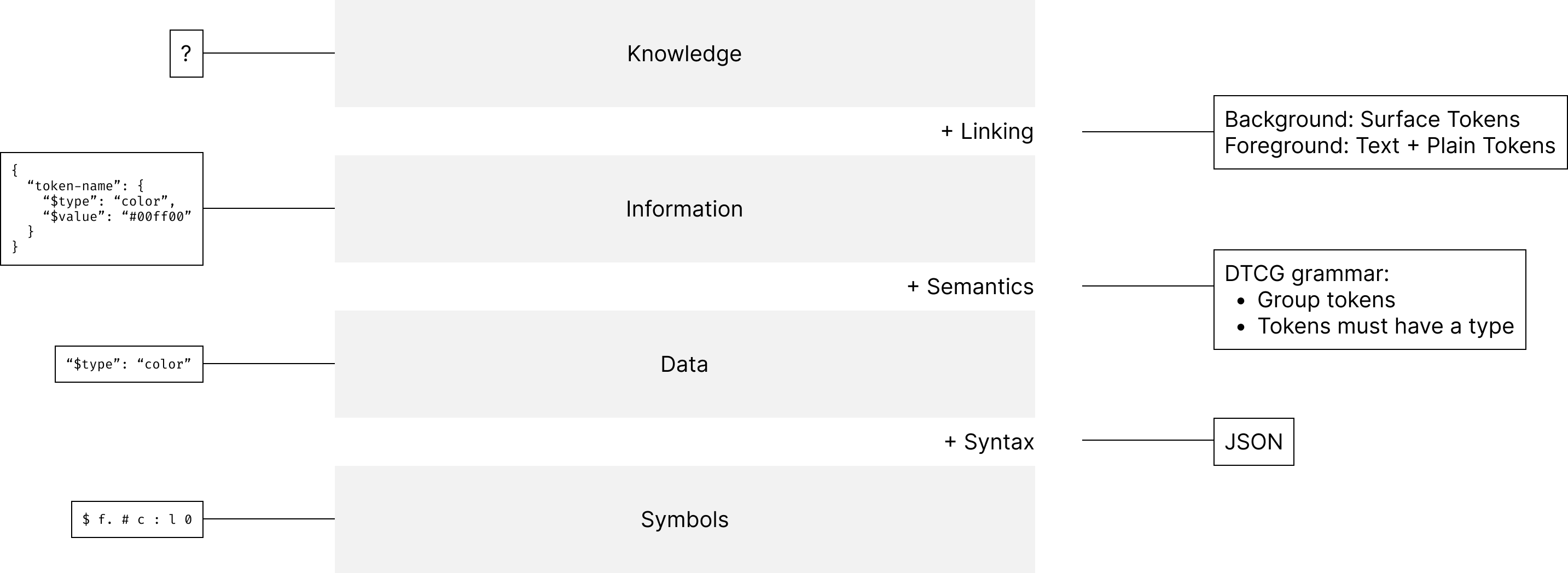

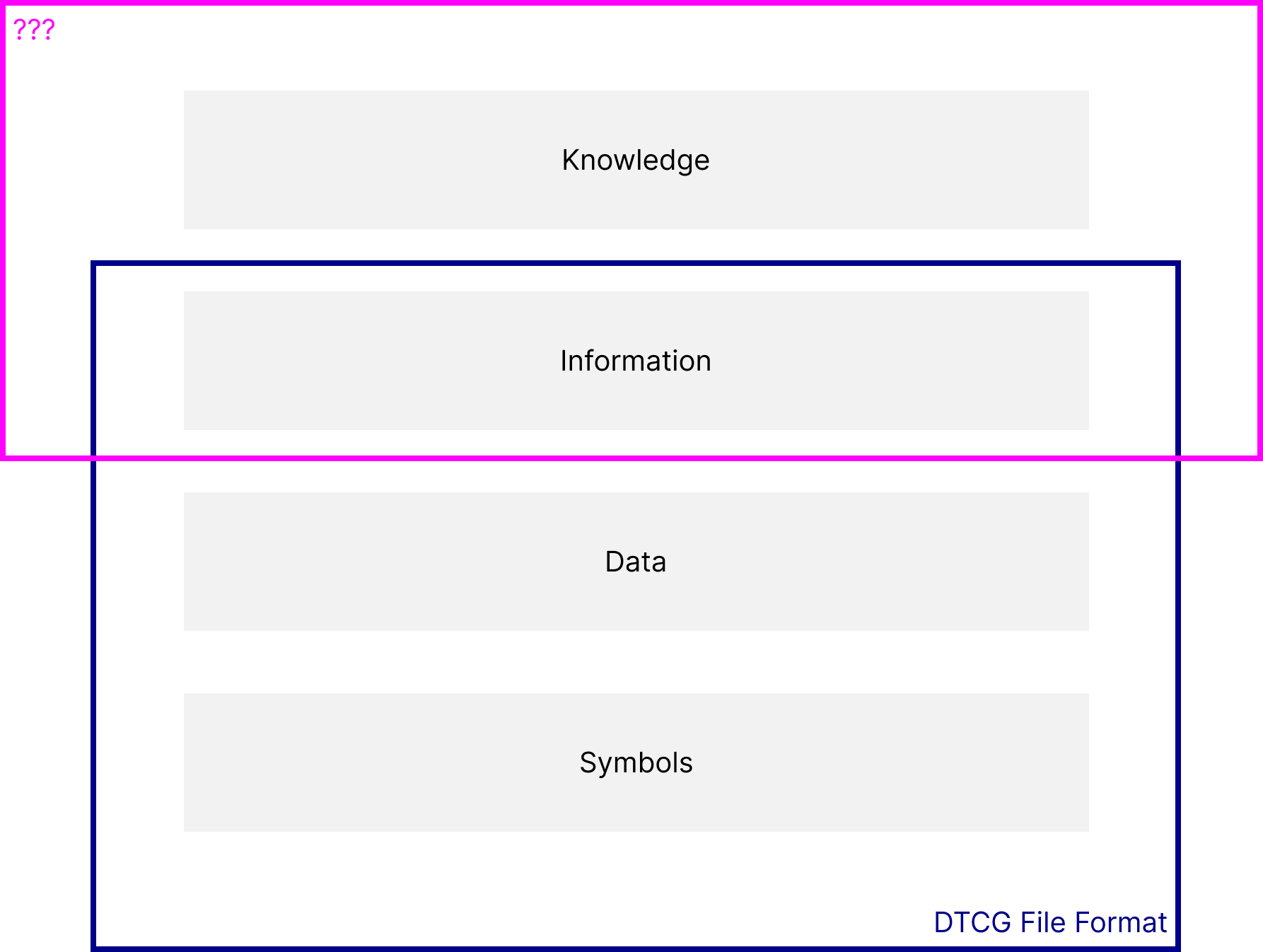

At the lowest level is a set of symbols. Combining them with syntax (e.g. JSON) turns them into structured data. Adding semantics in a given context (e.g. the DTCG grammar) provides meaning and turns data into information. Linking information creates knowledge.
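The four levels can be walked through with a single token. The following is a minimal sketch, not a reference implementation: it assumes the DTCG convention that an object carrying a `$value` property is a token, the color value is made up, and `collectTokens`, `knowledge` and `backgroundFor` are hypothetical names introduced only for illustration.

```javascript
// Level 1: symbols, a plain sequence of characters.
const symbols =
  '{"intent":{"action":{"base":{"text":{"$type":"color","$value":"#ffffff"}}}}}';

// Level 2: data, symbols combined with syntax (JSON).
const data = JSON.parse(symbols);

// Level 3: information, data read with semantics. Assumption: an object
// with a "$value" property is a token, everything else is a group.
function collectTokens(node, path = [], tokens = []) {
  if ('$value' in node) {
    tokens.push({ name: path.join('.'), type: node.$type, value: node.$value });
    return tokens;
  }
  for (const [key, child] of Object.entries(node)) {
    if (!key.startsWith('$') && child !== null && typeof child === 'object') {
      collectTokens(child, [...path, key], tokens);
    }
  }
  return tokens;
}

const information = collectTokens(data);

// Level 4: knowledge, information that is linked. This link is a
// hypothetical structure and not part of the DTCG format.
const knowledge = [
  { foreground: 'intent.action.base.text', pairsWith: 'intent.action.base.background' }
];

function backgroundFor(tokenName) {
  const link = knowledge.find((entry) => entry.foreground === tokenName);
  return link ? link.pairsWith : undefined;
}

console.log(information[0].name, '->', backgroundFor(information[0].name));
```

The first three levels are what a token file and its parser already provide. Only the last step, the link between two tokens, has no standardized home yet.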

James Nash and Louis Chenais (2022) give an introduction to the design tokens file format, and earlier Nash (2022) presented the future of design tokens, in which he shows some extended use cases. The format covers the information level of the information theory approach pictured above.

What’s left is the part linking this information to create knowledge that can be utilized by humans and machines.

The Confusion of Today

The absence of the aforementioned information linking leads to the confusion we see today. The following is a list of selected cases.

Token Matching

Do the tokens fit together?

    surfaces.layer

    intent.action.base.text

The answer is no. Either use intent.action.base.background as the background or use intent.action.base.plain as foreground – but how should token users know this?

Design systems try to combat this with on-surface-layer tokens, but this scales poorly, if at all. Documentation can help guide the proper usage of tokens, but this assistance must move to the fingertips of engineers or visually pop up for designers: a desired shift from flagging the wrong tokens to suggesting alternatives.

Spacing is Off

You’ve used a token that matched the value for the given design, and everything looks good. But then the theme is swapped, and what once was a harmoniously organized layout is now off and looks broken. The token was chosen based on its value, not on the purpose it was created for. How do you select the right token for the given context?
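A small sketch makes the problem concrete. The token names follow the spacing examples used later in this article. The theme names, the values and both helper functions are invented for illustration.

```javascript
// Hypothetical themes: the values are invented for illustration.
const themes = {
  compact: {
    'layout.spacing.primitive-gap': '8px',
    'layout.spacing.container-padding': '8px'
  },
  spacious: {
    'layout.spacing.primitive-gap': '8px',
    'layout.spacing.container-padding': '24px'
  }
};

// Picking by value: every token whose value happens to match the design.
function tokensWithValue(theme, value) {
  return Object.keys(theme).filter((name) => theme[name] === value);
}

// Themes in which two tokens no longer resolve to the same value.
function themesWhereTokensDiverge(allThemes, tokenA, tokenB) {
  return Object.keys(allThemes).filter(
    (themeName) => allThemes[themeName][tokenA] !== allThemes[themeName][tokenB]
  );
}

// In the "compact" theme both tokens look interchangeable ...
const candidates = tokensWithValue(themes.compact, '8px');

// ... but they stop being interchangeable once the theme is swapped.
const diverging = themesWhereTokensDiverge(themes, candidates[0], candidates[1]);

console.log(candidates, diverging);
```

Both tokens are valid picks when judged by value alone. Only their purpose tells them apart, and that purpose is not recorded anywhere a tool could read it.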

Tooling Hacks

Tools, for example Specify and Design Token Plugin for Figma, invented a grammar for how pages and/or nodes in Figma must be named in order for their products to find the information. Proprietary hacks are necessary for the vitality of these products, but in these cases they are unwanted hacks caused by the lack of native design token support in said design editor. While this problem is being worked on with the design tokens specification, and the entire industry is waiting for Figma to support design tokens, the next problem of this sort is around the corner.

Oppressive Tooling Conventions

Tools (products such as Specify, Zeroheight, Supernova, Knapsack, Tokens Studio, etc.) make the rules to the extent that they build inflexible boundaries and processes that are seldom adaptable to the companies using them. Examples:

- Some tools export tokens from Figma beginning with a “.” in their names, others don’t. Neither of these tools is right or wrong; it’s a configuration option that should integrate neatly with the other tools running in the pipeline.

- In Tokens Studio I made a set of sizing tokens but then wasn’t able to assign them to a border (because they weren’t sizing tokens marked for borders?). The usage was correct according to the system design, but the tool prohibited it due to its conventions.

- Tools understand tokens based on their types and are great at providing assistance around them. They do not, however, understand the topics in which tokens are grouped, and it is those topics that provide the boundaries within which tokens are valid.

As in the previous case, it is vital for tools to apply such hacks for a short time-to-market and other business conditions or priorities, but they need adjustments.

Config is Too Complex

The problems mentioned above are manageable with extensive configuration. That’s the idea behind Theemo: provide the tooling pipeline but leave the description of the design language to its users.

However, with Theemo the configuration is massive and complex. Here is a snippet:

    function fileForToken(token) {
      let fileName = '';

      // individual treatment for text style tokens
      if (token.type === 'text') {
        fileName = 'typography';
      }

      // all others
      else {
        // 1) LOCATION
        const parts = token.name.split('.');

        // special location ifm
        if (parts[0] === 'ifm') {
          fileName = 'ifm';
        }

        // let's see for the others
        else if (parts.length > 3) {
          fileName = parts.slice(0, 3).join('/');
        } else {
          fileName = parts[0];
        }

        // 2) ADD MODIFIERS
        if (token.colorScheme) {
          fileName += `.${token.colorScheme}`;
        }

        if (token.transient === true) {
          fileName += '.transient';
        }
      }

      // add folder to which token set this one belongs
      const folder = token.name.startsWith('ifm') ? 'website' : 'theme';

      return `${folder}/${fileName}`;
    }

-> Read the full config. The sheer length and complexity make people reluctant to use it.

I’m flippantly saying that tools like Specify, Knapsack, Zeroheight, et al. are the “Macs” amongst design token tools and Theemo is the “Linux” =)

The industry has yet to find the sweet spot for a pleasant configuration.

Superficial Tool Onboarding

Tools took the liberty of providing onboarding to help their users get started with design systems and design tokens: a great idea for marketing their product and supporting their users. The choices and “topics” provided for that onboarding are rather disappointing, though. When following them, design systems end up with colors, dimensions, fonts, cubic béziers, etc. (= token types). That’s about as helpful as asking an engineer what a feature consists of and getting the answer: classes, functions, interfaces, variables and modules.

The question arises: when three companies use tool x, would they create almost the same design system three times? Tools align around the technology they built for representing the technical token types. While this is the technical foundation for a tool, the hard work is only about to begin.

The Challenges Ahead

Linking information plays a big role in finding solutions for the aforementioned cases. The available information consists of tokens (with their internals) stored in the DTCG format, token specifications formalizing the language for a particular topic within your system, and other information specific to your system. The next step is to describe a grammar for linking information that encodes human-readable information for machines.

Designing a Grammar to Express your System’s Behavior

System behavior is defined by rules that restrict all possible behavior to only the allowed behavior, explaining the relationships between subsystems.

Take the initial confusion case about matching color tokens, with intent.action.base.text on surfaces.layer. The human-readable version of the designed behavior is:

    Background: Surface Tokens
    Foreground: Text + Plain Tokens

Now to express this in code for machines:

    {
      "type": "pairing",
      "background": {
        "matches": "layout.surface.*"
      },
      "foreground": {
        "matches": [
          "text.*",
          "*.plain.(*.)?text"
        ]
      }
    }

This formalizes one rule of the system (in an exemplary format); the expectation is that many will exist. The types a rule covers can be homogeneous or heterogeneous; working with a single token type is not enforced. For example, another rule might define how a shape is composed, to test a combination of tokens for a particular element:

    border-style: shape.stroke.*
    border-width: shape.stroke.*
    border-radius: shape.radii.*
    border-color: shape.stroke.* + *.border

Another set of rules would restrict tokens to only the purpose they were designed for, for example spacing tokens:

    # Primitives (inline)
    padding-inline: layout.spacing.primitive-padding
    gap: layout.spacing.primitive-gap

    # Containers (block)
    padding-inline: layout.spacing.container-padding
    column-gap: layout.spacing.container-gap-inline
    row-gap: layout.spacing.container-gap-block

The caveat is the distinction between two classes of elements: inline and block elements. MDN explains the layout for the normal flow, and Andy Bell and Heydon Pickering align boxes on the flow and writing axes. Properly interpreting and validating the example rules would require identifying the element they are attached to. Other rules need other preconditions to be met, which would require an arbitrary mechanism to run and evaluate them.
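To give an idea of how a machine could evaluate the pairing rule from above, here is a minimal sketch. The pattern semantics are an assumption, because the exemplary format does not define them: `*` is read as one or more characters (dots included) and `(...)?` as an optional part. The function names and the token names in the usage lines are hypothetical.

```javascript
// The pairing rule in the exemplary format shown above.
const pairingRule = {
  type: 'pairing',
  background: { matches: 'layout.surface.*' },
  foreground: { matches: ['text.*', '*.plain.(*.)?text'] }
};

// Assumed semantics: "." is literal, "*" is one or more characters,
// "( )" and "?" keep their meaning as an optional group.
function patternToRegExp(pattern) {
  const source = pattern
    .replace(/[.+^${}|[\]\\]/g, '\\$&') // escape everything except * ( ) ?
    .replace(/\*/g, '.+');
  return new RegExp(`^${source}$`);
}

// Accepts a single pattern or a list of patterns.
function matchesAny(tokenName, patterns) {
  return [].concat(patterns).some((pattern) => patternToRegExp(pattern).test(tokenName));
}

// A rule only applies when the background matches. If it applies,
// the foreground must match one of the allowed patterns.
function checkPairing(rule, backgroundToken, foregroundToken) {
  if (!matchesAny(backgroundToken, rule.background.matches)) {
    return { applies: false, valid: true };
  }
  return { applies: true, valid: matchesAny(foregroundToken, rule.foreground.matches) };
}

// Hypothetical token names for illustration.
const rejected = checkPairing(pairingRule, 'layout.surface.layer', 'intent.action.base.text');
const accepted = checkPairing(pairingRule, 'layout.surface.layer', 'intent.action.plain.text');

console.log(rejected, accepted);
```

Returning whether a rule applies separately from whether it holds keeps unrelated token combinations from being reported as errors, which matters once many rules exist side by side.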

A grammar to express system behavior links the available information and gives visibility to the otherwise hidden knowledge. Manifesting knowledge unlocks new capabilities, and the following sections explore some of them.

Linting

The obvious first scenario is linting, which moves assertions about proper token usage to the fingertips of engineers or lets them visually pop up for designers.

Great available tools are Stylelint and Designlint. I would favor a plugin for Stylelint that is configured with the grammar above. For Designlint I already asked for a config file, which should be the same one as for the Stylelint plugin.
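The core of such a lint check could look like the following sketch. It is deliberately a plain function and not a Stylelint plugin, so no plugin API is assumed. It uses the shape rules from above and reads the `+` in them as a list of alternatives, which is an assumption. The declarations in the usage lines and all function names are hypothetical.

```javascript
// The shape rules from above, keyed by CSS property.
const propertyRules = {
  'border-style': ['shape.stroke.*'],
  'border-width': ['shape.stroke.*'],
  'border-radius': ['shape.radii.*'],
  'border-color': ['shape.stroke.*', '*.border']
};

// Assumed semantics: "*" is one or more characters, all else is literal.
function globToRegExp(glob) {
  const escaped = glob.replace(/[.+?^${}()|[\]\\]/g, '\\$&');
  return new RegExp(`^${escaped.replace(/\*/g, '.+')}$`);
}

// Reports every declaration whose token is not allowed for its property
// and suggests the allowed patterns instead of only marking the error.
function lintDeclarations(declarations, rules) {
  const findings = [];
  for (const { property, token } of declarations) {
    const allowed = rules[property];
    if (!allowed) continue; // no rule for this property, nothing to check
    const isAllowed = allowed.some((glob) => globToRegExp(glob).test(token));
    if (!isAllowed) {
      findings.push({
        property,
        token,
        message: `"${token}" is not designed for "${property}"`,
        suggestion: allowed
      });
    }
  }
  return findings;
}

// Hypothetical declarations, as a tool might extract them from a stylesheet.
const findings = lintDeclarations(
  [
    { property: 'border-radius', token: 'shape.radii.small' },
    { property: 'border-color', token: 'intent.action.base.border' },
    { property: 'border-width', token: 'layout.spacing.primitive-gap' }
  ],
  propertyRules
);

console.log(findings);
```

Because the rules are plain data, the same file could feed an engineering linter and a design linter alike.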

Resilient Tool Configurations

Tools are responsible for the token pipeline, while the description of the design language belongs to its users (with tools providing sensible defaults). This includes:

- Tools to organize tokens by topics with heterogeneous types instead of organizing by homogeneous types. Do NOT assume that the topic name is the prefix of a token name – thank you.

- Tools to provide visual editors for system behavior

- Flexible options for pushing tokens through the pipeline

That would shift focus from a token type orientation towards the unique organization for one’s design system.

Onboarding Dialog

The onboarding experience can improve drastically on that basis. In a dialog design, tools can ask their users about the topics that describe their design language. Another question would be about the level of assistance: “Are you new to design systems, would you like to see some inspiration?”, followed by a couple of organization strategies. On a second level, tools can ask about the contents of a topic: which design tokens exist and which rules govern them?

Organization strategies might be:

- Elevation (Topic): surfaces + shadows

- Layout (Topic): surfaces; Shapes (Topic): strokes + radii + shadows

This showcases that surfaces can be hosted in different topics.

Not only does this create a much more customized and personalized experience; by asking these questions, tools also acquire the hidden knowledge between design tokens and can offer services on top, such as generating the documentation for these rules or hosting them for integration into Designlint.

Interactive Token Exploration

Decision charts for picking the right token are great for token users but cumbersome to maintain for token designers (especially in the early stages). Atlassian turned this into a playful experience with their token picker. In addition to finding the right token, the picker even recommends pairings with other tokens.

Additional information, such as recommendations, can live in the grammar for design system behavior; expect it to be used in creative ways.

A Starting Point for AI

The attempt to manifest the hidden knowledge and make it available to machines opens up opportunities for AI within design system tooling. An AI that understands the rules by which a particular system operates can provide tailored suggestions and recommendations to improve the system. Tools can better assist teams in building up their design system, for example: “Teams using this topic organization also placed shadows in elevation” or “We analyzed your documentation text and detected a rule for pairing tokens, shall we add it?”

Recap

The series started by exploring the environment in which design tokens are used, with traits, principals and agents, and the features that offer customizations for users.

The middle part began with modeling & communication in comparison to the three class token society. Tokens are placed on a scale, and a token specification manifests the scalability. All of these are the ingredients for naming design tokens with ease.

Theming is a way to swap out different brands or versions of one, and to support features through switches between multiple token values. Tokens store a lot of internals to support system decisions when pushed through the pipeline.

Finally, all comes together when the information encoded in tokens is linked up to create knowledge supporting your users in advanced and creative ways.

References

- Atlassian. (n.d.). Design tokens. Atlassian Design System. https://atlassian.design/components/tokens/all-tokens?isTokenPickerOpen=true

- Bell, A. & Pickering, H. (n.d.). Boxes. Every Layout. https://every-layout.dev/rudiments/boxes

- Bodendorf, F. (2006). Daten- und Wissensmanagement (2., aktualisierte und erweiterte Aufl.). Heidelberg: Springer Verlag.

- Chenais, L. & Nash, J. (2022, November 19). An introduction to the design tokens file format. In Schema 2022. https://www.youtube.com/watch?v=ssOdzxZdg58

- Mozilla Developer Network. (n.d.). Block and inline layout in normal flow. https://developer.mozilla.org/en-US/docs/Web/CSS/CSS_flow_layout/Block_and_inline_layout_in_normal_flow

- Nash, J. (2022, September 21). The Future of Design Tokens. In Into Design Systems. https://www.youtube.com/watch?v=Ots630OxRwE

- Rehäuser, J. & Krcmar, H. (1996). Wissensmanagement im Unternehmen. In G. Schreyögg & P. Conrad (Hrsg.), Wissensmanagement (Bd. 6, S. 1-40). Berlin: de Gruyter.

- Frey-Luxemburger, M. (2014). Wissensmanagement – Grundlagen und praktische Anwendung. Wiesbaden: Springer Fachmedien.